Original source publication: Polónia, F. and F. de Sá-Soares (2013). Key Issues in Information Systems Security Management. Proceedings of the 33th International Conference on Information Systems—ICIS 2013. Milan (Italy).


Key Issues in Information Systems Security Management

Universidade do Minho, Centro Algoritmi, Guimarães, Portugal

Abstract

The increasing dependence of organizations on information and the need to protect it from numerous threats justify the organizational activity of information systems security management. Managers responsible for safeguarding information systems assets are confronted with several challenges. From the practitioners’ point of view, those challenges may be understood as the fundamental key issues they must deal with in the course of their professional activities. This research aims to identify and prioritize the key issues that information systems security managers face, or believe they will face, in the near future. The Delphi method combined with Q-sort technique was employed using an initial survey obtained from literature review followed by semi-structured interviews with respondents. A moderate consensus was found after three rounds with a high stability of results between rounds. A ranked list of 26 key issues is presented and discussed. Suggestions for future work are made.

Keywords: Key Issues; Information Systems Security; Information Systems Security Management; Information Systems Management; Delphi; Q-Sort

Introduction

In a fast-changing business environment, where innovative uses of information made possible by new technologies occur at a rapid pace and are accompanied by the emergence of new threats to information assets, information systems (IS) managers face myriad challenges to maintain an adequate IS security (ISS) level and, by extension, to preserve the integrity of organizations and their ability to survive and thrive in the market. From the practitioners’ point of view, those challenges may be understood as the fundamental key issues they deal with in the course of their professional activities.

This study aims to identify and prioritize the key issues that ISS managers face, or believe they will face in 5 to 10 years. Capturing and classifying those issues according to their importance is of value to different stakeholders. Namely, it can assist top level management in the analysis of IS strategic investments; guide vendors in the development of security products; improve IS managers’ understanding of the activities and responsibilities that surround their job; make information security consultants aware of the most important ISS issues in the industry’s reality as sensed by ISS managers; and suggest potential areas of interest for researchers to investigate in greater depth.

The paper is organized as follows. First, we review the major studies on key issues conducted in the area of IS. Then, the research design is outlined, followed by the description of the study that was conducted. Afterwards, we present and discuss the main findings of the research. The paper ends by drawing conclusions, identifying limitations, and advancing future work opportunities.

Studies of Key Issues in Information Systems

The field of IS has had a number of studies aimed at identifying key concerns of IT management executives by asking a panel of experts their opinion on a given subject. This tradition of asking a panel to sort their most important concerns began in 1980, in the USA, when the Society for Information Management (SIM) commissioned a survey to uncover the key issues its members were facing. Subsequent studies were conducted at approximately three-year intervals. After a gap of nine years, between 1994 and 2003, the surveys were resumed on an annual basis. Table 1 summarizes those studies. For each study, the table indicates the year of the survey, the number of times (rounds) participants were asked to classify the issues, the number of participants per round, and the number of issues subject to rating.

Table 1: Studies on Information Systems Management Key Issues

The studies on IS management concerns sponsored by SIM were able to identify and prioritize several issues deemed important to IT executives. The periodical promotion of the surveys allowed the speculation on the longitudinal evolution of the key issues, the highlighting of enduring concerns, the consideration of new challenges, and the identification of the passing issues that became no longer relevant.

From a methodological point of view, the studies promoted by the SIM from 1983 to 1994 applied the Delphi method, a common technique used to gather information from a panel, requiring several survey rounds to identify and rank the key issues. The use of successive rounds in a study forces the panel to find a consensus, a consolidated result from the entire panel as a whole on the order of importance of the issues. Some studies combined Delphi with other research methods, such as telephone interviews, enriching the study with a qualitative component. From 2003 to 2011, the SIM Board decided to query respondents in a single round, according to a procedure similar to the one used in the 1980 study.

Research Design

To accomplish the study’s objective, the Delphi method was selected. In the IS field, the popularity of this research strategy is particularly high when key issue classification studies are conducted [Okoli and Pawlowski 2004]. Delphi uses a series of linked questionnaires, usually known as rounds, where participants are continually asked to re-evaluate their answers (to the same questions) in light of the summarized group result of the previous round. That is, after each round, results are summarized, given back to the experts, and a new (usually identical) questionnaire is provided for another evaluation. For this evaluation the expert should take into account the aggregated opinion of the other experts. Three to five questionnaires are usually needed until a consensus among participants is achieved [Delbeq 1986]. Researchers usually apply this method expecting consensus to be reached among participants. If that is not the case, the results may still give important insights, since the Delphi method may identify divergences and contradictions between participants, the analysis of which is as valuable as that done on data from consensus [Procter and Hunt 1994].
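The feedback step described above, in which individual answers are summarized into a group result and returned to the panel, can be sketched as follows. This is only an illustration: the paper does not state how the group result was computed, so aggregation by mean rank, the function name `group_ranking`, and the sample data are all assumptions.

```python
def group_ranking(rankings):
    """Aggregate individual rankings into one group ranking.

    rankings[e][i] is the rank (1 = most important) that expert e gave
    to issue i. Aggregation by mean rank is an assumption made for this
    sketch, not the procedure documented in the study.
    """
    n_issues = len(rankings[0])
    n_experts = len(rankings)
    mean_ranks = [
        sum(expert[i] for expert in rankings) / n_experts
        for i in range(n_issues)
    ]
    # Order issues by mean rank; sorted() is stable, so ties keep the
    # original issue order.
    order = sorted(range(n_issues), key=lambda i: mean_ranks[i])
    group = [0] * n_issues
    for position, issue in enumerate(order, start=1):
        group[issue] = position
    return group


# Hypothetical round: three experts ranking three issues.
round_answers = [[3, 1, 2], [3, 2, 1], [2, 1, 3]]
feedback = group_ranking(round_answers)  # group rank per issue
```

In a Delphi round, `feedback` would be what each expert sees before re-evaluating their own ranking.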

Since the aim of the study was not limited to the enumeration of ISS management concerns, but also intended to categorize them according to their level of importance, we asked participants to classify the issues by ranking them via the Q-sort technique. The Q-sort technique consists of a sorting procedure where a set of cards with inscriptions (phrases, words, or figures) is laid down in a pyramid. In this study, for each key issue there was a corresponding card. Q-sort has a set of specific procedures that have to be performed by participants. These procedures start with the familiarization of the subject with all the cards.
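A Q-sort pyramid can be thought of as a fixed number of slots per column, which every participant must fill exactly. The sketch below checks that constraint. The column capacities, the card names, and the function `is_valid_qsort` are hypothetical; the paper does not describe the shape of the pyramid that was used.

```python
from collections import Counter


def is_valid_qsort(placement, capacities):
    """Check that a card placement fills a Q-sort pyramid exactly.

    placement maps each card to a column index; capacities[c] is the
    number of slots in column c. Both structures are assumptions made
    for this illustration.
    """
    if any(col < 0 or col >= len(capacities) for col in placement.values()):
        return False
    counts = Counter(placement.values())
    return all(counts[col] == cap for col, cap in enumerate(capacities))


# Hypothetical nine-card pyramid: few slots at the extremes, more in the middle.
capacities = [1, 2, 3, 2, 1]
placement = {
    "issue_1": 0,
    "issue_2": 1, "issue_3": 1,
    "issue_4": 2, "issue_5": 2, "issue_6": 2,
    "issue_7": 3, "issue_8": 3,
    "issue_9": 4,
}
complete = is_valid_qsort(placement, capacities)
```

A survey tool could run such a check before accepting a submission, so that every response follows the same forced distribution.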

At the design stage of this research the possibility of conducting interviews with participants in the Delphi study after the last round was also foreseen. This would add extra value if the analysis of the Delphi results justified the need for collecting qualitative data that would allow a better understanding of the final list of ISS key issues or the final ranking.

The setup of this study involved the following tasks that will be detailed next: selection and invitation of experts to the Delphi panel, decision on the design of rounds, definition of the means of communication with experts, and definition of round-stopping criteria.

Delphi Panel

Since we did not have a prior pool of ISS managers from which we could form the panel, we started by identifying the 500 largest companies in Portugal. To this list we added the top 200 IT companies in Portugal (by revenue). These companies were contacted by email, fax, and telephone. An invitation was sent to collaborate in the study by requesting the name, email address, and phone contacts of the professional responsible for ISS matters in the organization. Public services, hospitals, health care centers, universities, institutes, and colleges were also contacted. Also included were contacts obtained through academic and professional conference programs and workshops, as well as contacts suggested by experts in the area. From this process, 182 experts were identified. A personal letter followed to each of these, inviting them to participate in the first round of the study, by giving their opinion on the importance of each issue.

Design of Rounds

Regarding the design of rounds, we had to decide whether the first round would be a blank-sheet round, to be used to collect from participants their opinions on what the key issues in ISS management are, or a regular ranking round, requiring the provision of a list of potential key issues that participants would classify. We chose the latter, mainly because we could compose an initial list of issues from literature to reduce the effort required from participants. It was also decided that in the first round participants could add new concerns to the original list of issues compiled. This ensured that the list could be enriched with issues that somehow escaped the scope of literature, giving experts the ability to tweak the list in case they found that the presented issues did not best reflect their reality. The subsequent rounds would be closed (participants would not be able to add new items). This decision also helped to keep the number of rounds within a reasonable limit. An additional decision was to not drop the issues that were considered less important, except if at the end of the first round the number of new concerns eventually suggested by participants would render the next round prohibitive in terms of respondents’ effort.

To collect data, we used a Web tool to administer the surveys. The availability of such a tool gives participants the convenience of answering and reduces the time between rounds, helping the panel to keep the enthusiasm and eliminating time spent on the data transcription phase. The tool implemented the procedures associated with the Q-sort technique, thus ensuring the participants would correctly perform its steps.

Communication Protocol

The communication protocol to be used between the research team and the participants during the Delphi rounds had a set of predefined rules. An email message sent to each participant initiated each round. This message included information about the purpose of the study, by recalling and explaining the research question that would guide the expert’s participation; the goal and design of the round; the period within which it would be open to answers; the Web link and credentials that would enable participation; a guarantee of confidentiality and anonymity; an emphasis on the importance of the expert’s contribution to the success of the study; indication of researchers’ contacts for any unforeseen circumstance; and for the second and subsequent rounds, a brief description of the consensus obtained in the previous round. The evolution of the answers in each round would be closely monitored by researchers. To those participants who had not yet responded, a reminder would be sent by email two days before the closing date of the round and on the day the answering period would end. In case there was evidence of difficulties or unavailability of the participants, the answering period would be extended. Finally, every contact made by participants would be replied to promptly by the research team.

Stop Criteria

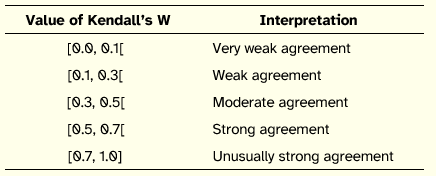

The majority of the Delphi studies that were reviewed define two main criteria for the Delphi rounds to stop. The first one dictates that rounds should stop in the absence of progression of consensus between rounds, or alternatively, when a high consensus degree in one round is achieved. The second consists of a pre-defined maximum number of rounds whose determination is based on the behavior of experts observed in previous studies, independently of the values obtained for consensus. Regarding the first stop criterion, we used the nonparametric tests of Kendall’s coefficient of concordance (W) and Kendall’s rank-order correlation coefficient (T) to evaluate if a high consensus was obtained or if progression between rounds stopped.

Table 2: Interpretation of Kendall’s W

Adapted from Schmidt [1997]
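For readers unfamiliar with Kendall's coefficient of concordance, the following minimal sketch computes W from complete rankings without ties, using the standard formula W = 12S / (m²(n³ − n)), where m is the number of experts, n the number of issues, and S the sum of squared deviations of the per-issue rank sums from their mean. The function name and the sample data are illustrative assumptions, not the study's data or tooling.

```python
def kendalls_w(rankings):
    """Kendall's coefficient of concordance for untied, complete rankings.

    rankings[e][i] is the rank (1..n) that expert e assigned to issue i.
    Returns a value between 0 (no agreement) and 1 (perfect agreement).
    """
    m = len(rankings)        # number of experts
    n = len(rankings[0])     # number of issues
    rank_sums = [sum(expert[i] for expert in rankings) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((total - mean_rank_sum) ** 2 for total in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


# Hypothetical panel: three experts ranking four issues.
panel = [
    [1, 2, 3, 4],
    [2, 1, 3, 4],
    [1, 3, 2, 4],
]
w = kendalls_w(panel)  # about 0.78 for this sample
```

The untied formula fits a Q-sort that forces a strict ordering; rankings with ties would need the tie-corrected version of W.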

Degree of concordance indicates the level of consensus in the evaluation made by experts, on the same set of key issues, in a given Delphi round. However, it is also necessary to measure the degree of concordance between each evaluation made by the panel as a whole, over time. This last measurement reveals the stability of the panel’s selection throughout the rounds, indicating if the opinions are converging or diverging as a whole. Kendall’s rank-order correlation coefficient (T) was the metric applied to determine this. It outputs a value between -1 (perfect disagreement) and 1 (perfect agreement), with 0 suggesting that the variables involved are independent and therefore unrelated. In this study we expected to see an increase of the positive coefficient over time.
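The stability measure can be illustrated with a small sketch that compares the group ranking of one round with that of the next. It implements the untied form of Kendall's coefficient (often called tau-a), which counts concordant and discordant pairs of issues. Assuming untied group rankings is a simplification made for this example, and the rankings shown are hypothetical.

```python
from itertools import combinations


def kendall_tau(rank_a, rank_b):
    """Kendall's rank-order correlation for two untied rankings.

    rank_a[i] and rank_b[i] are the ranks of issue i in two rounds.
    Returns a value between -1 (reversed order) and 1 (identical order).
    """
    n = len(rank_a)
    concordant = 0
    discordant = 0
    for i, j in combinations(range(n), 2):
        direction = (rank_a[i] - rank_a[j]) * (rank_b[i] - rank_b[j])
        if direction > 0:
            concordant += 1
        elif direction < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


# Hypothetical group rankings of four issues in two consecutive rounds.
round_1 = [1, 2, 3, 4]
round_2 = [1, 3, 2, 4]
stability = kendall_tau(round_1, round_2)  # one swapped pair out of six
```

Values closer to 1 in later round pairs would indicate that the panel's ordering is settling.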

It is advisable to establish a maximum number of rounds, with Procter and Hunt [1994] observing that two or three rounds are the usual limit. A high number of rounds may have negative consequences as there is a high probability of exhaustion of the panel [Marsden et al. 2003]. Hence, in this study we established that the maximum number of rounds would be three, independently from the results.

To sum up, in the present study, the stop criteria relied on two different conditions: the rounds would stop either when a round presented a high consensus value that had not progressed from the preceding round or, in the absence of this condition, at the third round.
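The two conditions can be expressed as a small decision function. This is a sketch under stated assumptions: the threshold `high_w` used to decide what counts as "high" consensus and all the W values in the example are hypothetical, since the paper does not give a numeric cut-off in the text.

```python
def should_stop(round_number, w_current, w_previous=None,
                high_w=0.7, max_rounds=3):
    """Decide whether the Delphi rounds should stop.

    Stops at the maximum number of rounds, or earlier when consensus is
    high and has not progressed from the preceding round. The value of
    high_w is an assumed threshold, not one taken from the study.
    """
    if round_number >= max_rounds:
        return True
    if w_previous is None:
        # First round: there is no preceding round to compare against.
        return False
    return w_current >= high_w and w_current <= w_previous


# Hypothetical sequence of W values over three rounds.
decisions = [
    should_stop(1, 0.25),
    should_stop(2, 0.30, 0.25),
    should_stop(3, 0.50, 0.30),
]
```

With these sample values the early-stop condition never fires, so the process ends only because the third round is the pre-defined limit.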

Description of the Study

The study involved the elaboration of the initial list of ISS key issues, the administration of surveys over three Delphi rounds, and final interviews with a subset of the respondents to the last two rounds.

Development of the ISS Key Issues List

Following the decision to not start the Delphi study with a blank-sheet round, we had first to develop a list of key issues in the area of ISS that would be presented to participants in the initial Delphi round. We developed this list of issues from the literature. Magazines, journals, books, and conference proceedings in the area of interest are the most widely used resources for building such a list. In this technique, a time limit needs to be defined to narrow the literature analysis, that is, all sources would be scrutinized, from a given year to the present date. This decision was made considering the aim of the study, namely that the key issues should illustrate the main concerns of ISS managers. Therefore, the literature should reflect issues that are (or will be) important, among them issues that are constantly present in this area of knowledge. Both new and resilient issues can be found in recent publications as they are either a novelty, or a constant concern; thus, going back to older publications will only add issues that are no longer current.

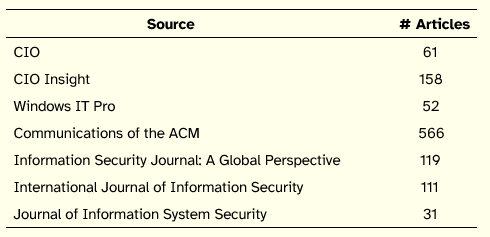

Several magazines and journals in the areas of IS management and ISS were selected to be analyzed in order to discover the issues currently being discussed. All issues of the following publications from January 2005 were part of this analysis, namely: CIO, CIO Insight, Windows IT Pro, Communications of the ACM, Information Security Journal: A Global Perspective, International Journal of Information Security, and Journal of Information System Security. These sources combined publications marketed towards practitioners and those marketed towards academics, and overlapped security issues with top management issues. The total number of articles analyzed amounted to 1098, distributed by source as illustrated in Table 3.

Table 3: Number of Articles Analyzed by Source

Many of the articles discussed concerns that were beyond the scope of this investigation or did not add any key issue at all, so they were discarded. To arrive at the final list of issues, we identified, aggregated, and described those issues. An iterative approach was used in which new keywords were added to a list and described in context. The list of keywords and their contexts were analyzed in order to interpret their purpose. From this step we obtained a list of issues pertinent to ISS Management. Finally, we needed to ensure that the list was solid in terms of content. A small group of information security experts from the industry participated in an initial validation of the list before starting the Delphi rounds. Nonetheless, in the first round of the Delphi all participants were asked to validate and suggest changes to the list of issues, in case they felt that the issues described were not representative of their reality. From the process of literature analysis and consolidation of potential ISS concerns resulted a list of 25 key issues that formed the first survey presented to the Delphi panel (cf. Appendix A).

Delphi Rounds

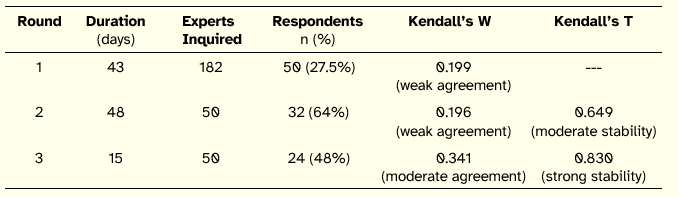

The Delphi study involved three rounds. Table 4 summarizes the rounds in terms of duration (number of days that the round was open), number of experts inquired in each round, number of respondents and corresponding response rate per round, and Kendall’s W and T values.

Table 4: Summary of Delphi Rounds

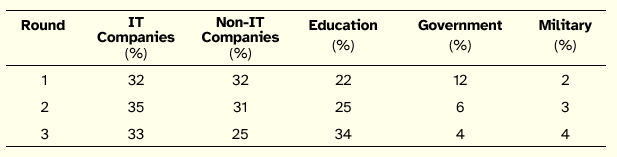

Table 5 illustrates the distribution of respondents by industry through the Delphi rounds. This distribution can be considered stable during the rounds and does not compromise the heterogeneity of the panel over time or the direct comparative analysis.

Table 5: Distribution of Respondents by Industry through Delphi Rounds

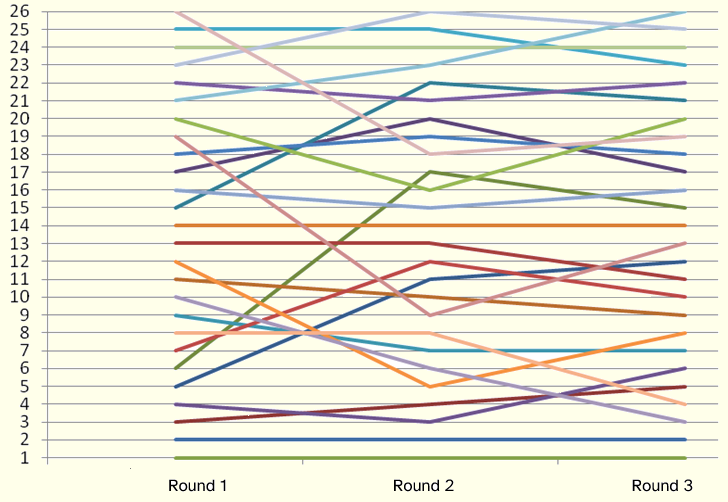

Figure 1 depicts the evolution of the rank of each key issue throughout the rounds (for the discussion ahead, it is not necessary to be aware of the correspondence between each line and a given key issue). The evolution of each key issue rank throughout the three Delphi rounds can be found in Appendix A. It should be noted that on the first round the issues were considered individually by the experts. However, for subsequent rounds, experts had the information from the previous round, explaining the strong variation of some issues between the first two rounds. It can be seen, however, that the steepness of the lines diminishes between the second and third rounds, evidencing the increased agreement between the respondents (fewer adjustments were needed).

Figure 1. Evolution of Key Issues through Delphi Rounds

Interviews

After completing the Delphi rounds, we considered it relevant to add qualitative data to the study. All respondents to the second and third rounds were contacted and invited to comment on the results and to assess the study. Fifteen participants made themselves available, representing about 63% of the respondents of the third round and 30% of the respondents of the second round. The average work experience in the area of ISS of these interviewed professionals was 11.6 years.

This fourth stage of research consisted of semi-structured interviews with members of the panel. These interviews were done in loco with trips of the first author to the organizations in which the experts were operating. With one exception, all interviews were audio-taped so that the researcher could concentrate on the conversation and not on the task of collecting notes.

The interviews aimed to determine the specific reasoning underlying the experts’ responses. Due to time constraints, the interviews focused only on the motivations associated with the key issues that were chosen by the respondent as the top 3 most important issues and the bottom 3 least important issues. The experts were also queried on their opinion about the relevance of the study. Without exception they highlighted the difficulty in sorting some of the issues, noting also the timeliness and up-to-date quality of the survey, asserting that it summarizes the concerns they face or expect to face in 5 to 10 years, regarding ISS Management.

Analysis

In this section we analyze the study in terms of response rate, level of consensus achieved, possibility of random answers by participants, possibility of clustering respondents, and content of interviews.

Response Rate

The response rate for the first round (27.5%) is acceptable since there was no previous agreement with the experts to participate in the study. This represents 50 experts that participated in the first round. Usually, the response rate for Delphi studies is between 40% and 50% [Linstone and Turoff 1975]. Our subsequent rounds achieved a response rate of 64% and 48%.

Consensus

Qualitatively, for the first two rounds, using the interpretation proposed by Schmidt [1997], a weak agreement was found between panel members. For the third round, there was moderate consensus. Additionally, there was a growing stability between the first and second rounds, and between the second and third rounds (T took values of 0.649 and 0.830, respectively). This longitudinal stability reveals the progression of the result, i.e., the increasing correlation between rounds. This progression can be observed in Figure 1, where for 24 of the 26 issues, the slope (in absolute value) of the line of progression between Round 1 and Round 2 is greater than the slope between Round 2 and Round 3. This simple analysis shows that there was less need to make adjustments between the second and third rounds compared to the necessary adjustments between the first and second rounds.
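The slope comparison amounts to checking, for each issue, whether its rank moved more between Rounds 1 and 2 than between Rounds 2 and 3. A minimal sketch follows; the function name and the sample ranks are hypothetical, and the actual ranks are those reported in Appendix A.

```python
def count_settling_issues(ranks_by_issue):
    """Count issues whose rank changed more from Round 1 to Round 2
    than from Round 2 to Round 3.

    ranks_by_issue is a list of (round_1, round_2, round_3) rank tuples,
    one per key issue.
    """
    return sum(
        1
        for r1, r2, r3 in ranks_by_issue
        if abs(r2 - r1) > abs(r3 - r2)
    )


# Hypothetical ranks for three issues over the three rounds.
sample = [(5, 2, 2), (1, 3, 2), (4, 4, 6)]
settling = count_settling_issues(sample)  # 2 of the 3 sample issues
```

Applied to the full list of 26 issues, the same count would correspond to the 24 issues mentioned in the text.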

Possibility of Random Answers

To judge the likelihood of random answers, an analysis was made between the responses of experts in a given round and their own responses in the preceding round as well as the group’s response. The metric used was Kendall’s T. It was found that there is some level of agreement between the responses of each expert in a specific round and the response of the group for the round that preceded it. These observations rule out the possibility that random answers might have been given. It can also be said that the response of each expert is somewhat changeable over time, suggesting a review of the individual ranking against the preceding panel ranking, as is normally expected in Delphi studies.

Possibility of Clustering

Interviews

The different realities of the experts, their businesses, the markets in which their organizations operate, and the responsibilities they face provide different interpretations to the same key issue. Although there was a description for each issue, the experience of each expert provided a background against which issues were interpreted from the perspective of their own businesses.

The interviews revealed that 14 out of 15 experts explained their choices based on the alignment with their businesses, with experts keeping in mind the importance of continuity as well as the usefulness of security in the context in which their businesses operate. With one exception, all experts agreed that the answers given were based on the intense consideration of their professional realities. The interpretation of each question in the light of their reality determined the relative ranking of the issues. Some experts mentioned that certain aspects do not apply to all businesses and are dependent on the perception of different organizational cultures. This suggests that the information security culture of the organization and its maturity level in terms of information systems security management may play an important role in prioritizing ISS concerns.

Discussion

The ordered list does not show a logical grouping by the nature of the issues. In fact, the list is quite heterogeneous in that respect: there are issues pertaining to different types of controls (technical, formal, informal, and regulatory), as well as issues related to different classes of controls (directing, structuring, learning, preventing, detecting, and reacting). Therefore, we chose to focus the discussion on the top and bottom five key issues of the ranked list and their connection with what was found in the interviews.

The contractual liability is neglected since it is unrealistic in the Portuguese legal system, with the experts stating that the company would collapse before being able to recover any loss due to the installation or operation of a product or service. Certificates are seen as mere symbols to create organizational image that are not necessary to have in order to meet the requirements demanded by those same certifications. Interviewees also added that a number of procedures on these certifications do not apply to some businesses or even impair the functioning of said business, making their business processes slow or hindering their flow without any return. The issue that focuses on the justification of investments at first seems contradictory, yet such is not the case in light of the interpretation provided by the vast majority of the experts interviewed. If the investment is aligned with business, the justification is not considered necessary since it is the very requirement for top management to meet its strategic objectives. On the other hand, if the investment is misaligned with business, then it is wrong to try to justify it, as those investment requirements are unfounded, since they are not really necessary. The fact that this issue showed a lower priority may suggest that the majority of the panel members are part of organizations whose management has strategic perceptions and concerns about ISS. Finally, the integration of new procedures and products has relative importance, that is, if it is necessary for the business then it is covered by the alignment (which is a priority); if it is not needed, then there is no justification for integrating new procedures or products just because they are being marketed. We think that these first and last five issues describe very well the reasoning and the grounding structure for answering the research question that guided this study.

Conclusion

As the dependence of organizations on information grows so does the need to protect IS assets. Professionals who are in charge of the management of IS protection face a set of concerns which this research considered important to identify and prioritize. Accordingly, a number of concerns were found as well as their respective ordering and consolidation. In addition, a qualitative interpretation was provided to identify the reasons that support the importance given to each concern.

Limitations

As with any study, this one also presents several limitations. The first limitation relates to the way the survey was designed. Although the survey went through iterative revisions and the first Delphi round was open to the introduction of new issues, some questions may comprise a larger scope than others. Validation during interviews mitigated this possibility, but it did not eliminate it altogether. A second limitation results from the subjective interpretation of key issues by experts. Although the descriptions associated with each of the key issues were provided to lessen this factor, there still may be room for differences of interpretation. Subjectivity in this study was assessed using the Q-sort technique. This technique has limitations, including forcing a classification of issues without ties, preventing an expert from classifying two issues at the same level of importance. The third limitation we identified is also a requirement of the study: the heterogeneity of the panel. Although this condition was necessary for answering the research question, heterogeneous panels tend to converge more slowly to consensus. The last limitation relates to the study being geographically confined to Portugal, which limits the extrapolation of results to other settings, as other cultures may classify issues from a different perspective.

Future Work

Contributions

Aware of the limitations inherent to any study, with the data obtained during the Delphi rounds and in the interviews to clarify the motivations of experts, we argue that the key issues that ISS managers currently face are outlined in Appendix A, although their priorities depend on the business and on the alignment of ISS with the business strategy. Furthermore, we claim that despite its limitations, the expected contributions of the study were achieved. The ordered list provides a solid contribution to the understanding of the key aspects that rule the ISS manager’s job and may be useful for different stakeholders. Besides being helpful in the analysis of IS strategic investments by top management, whether related to protocols, products, or human resources across industries, the list of issues may assist in the dialog between IS managers and ISS managers, contributing to mutual understanding of responsibilities and priorities. The consideration of the ranked list of issues may prompt ISS developers and consultants to evaluate their current offers in terms of products and services, as well as to gain a better understanding of why some issues are devalued by current ISS managers. In a similar vein, ISS researchers may find potential areas of research where deeper investigation and new perspectives may be justified, such as how to demonstrate the value of ISS, how to obtain more sophisticated and comprehensive detection techniques, why behavioral controls need to improve their effectiveness, or how to expand the benefits of ISS certification for organizations.

Acknowledgments

This work is funded by FEDER funds through Programa Operacional Fatores de Competitividade—COMPETE and National funds by FCT—Fundação para a Ciência e Tecnologia under Project FCOMP-01-0124-FEDER-022674.

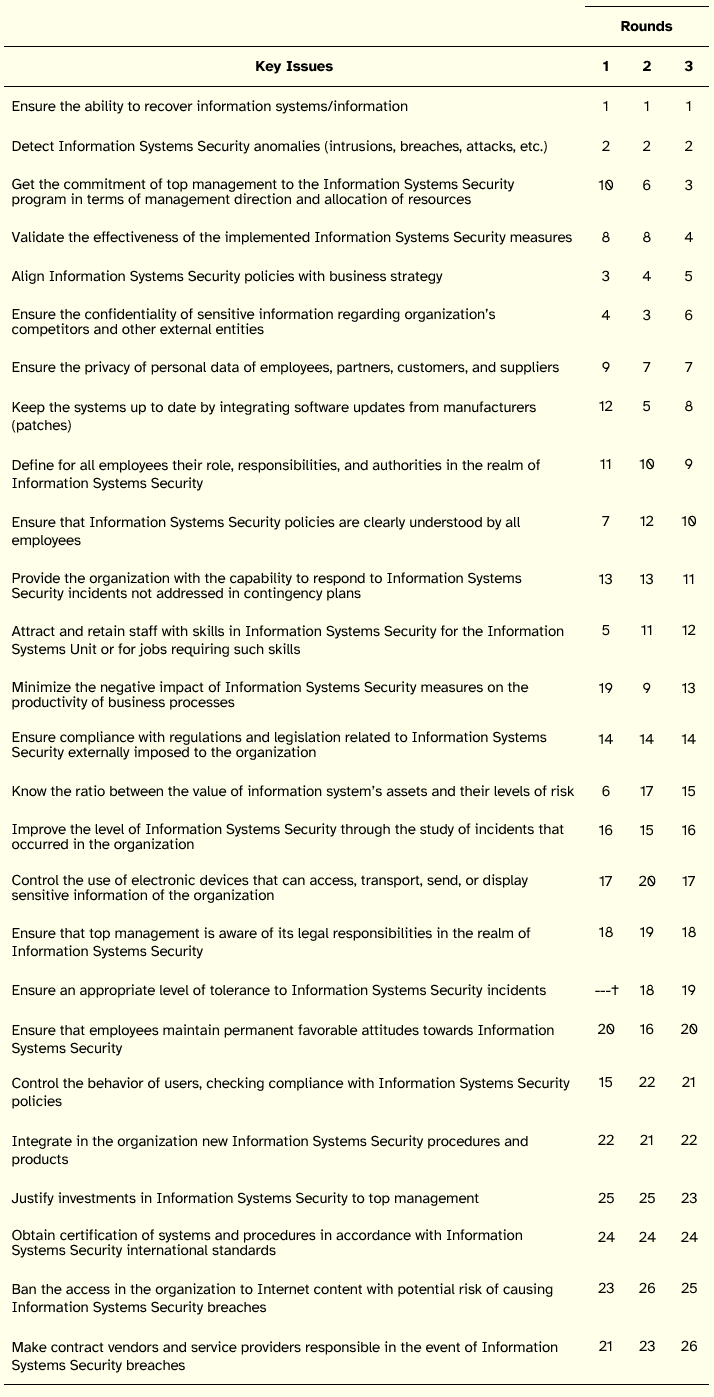

Appendix A—Results by Delphi Rounds

Issues sorted according to the final ranking.

† Issue suggested by one participant in the first round.

References

Ball, L. and R. Harris (1982). SMIS Members: A Membership Analysis. MIS Quarterly 6(1), 19–38.

Beretta, R. (1996). A critical view of the Delphi technique. Nurse Researcher 3(4), 79–89.

Brancheau, J. and J. Wetherbe (1987). Key Issues in Information Systems Management. MIS Quarterly 11(1), 23–45.

Brancheau, J., B. D. Janz and J. C. Wetherbe (1996). Key Issues in Information Systems Management: 1994-95 SIM Delphi Results. MIS Quarterly 20(2), 225–242.

Delbeq, A. L. (1986). Group Techniques for Program Planning: A Guide to Nominal Group and Delphi Processes. Wisconsin: Green Briar Press.

Dickson, G. W., R. L. Leitheiser, J. C. Wetherbe and M. Nechis (1984). Key Information Systems Issues for the 1980’s. MIS Quarterly 8(3), 135–159.

Duffield, C. (1993). The Delphi technique: a comparison of results obtained using two expert panels. International Journal of Nursing Studies 30(3), 227–237.

Linstone, H. A. and M. Turoff (Eds.) (1975). The Delphi Method: Techniques and Applications. Massachusetts: Addison Wesley.

Luftman, J. (2005). Key Issues for IT Executives 2004. MIS Quarterly Executive 4(2), 269–285.

Luftman, J. and T. Ben-Zvi (2010a). Key Issues for IT Executives 2009: Difficult Economy’s Impact. MIS Quarterly Executive 9(1), 49–59.

Luftman, J. and T. Ben-Zvi (2010b). Key Issues for IT Executives 2010: Judicious IT Investments Continue Post-Recession. MIS Quarterly Executive 9(1), 263–273.

Luftman, J. and T. Ben-Zvi (2011). Key Issues for IT Executives 2011: Cautious Optimism in Uncertain Economic Times. MIS Quarterly Executive 10(4), 203–212.

Luftman, J. and B. Derksen (2012). Key Issues for IT Executives 2012: Doing More with Less. MIS Quarterly Executive 11(4), 207–218.

Luftman, J. and R. Kempaiah (2008). Key Issues for IT Executives 2007. MIS Quarterly Executive 7(2), 99–112.

Luftman, J. and E. R. McLean (2004). Key Issues for IT Executives. MIS Quarterly Executive 3(2), 89–104.

Luftman, J., R. Kempaiah and E. Nash (2006). Key Issues for IT Executives 2005. MIS Quarterly Executive 5(2), 81–99.

Luftman, J., R. Kempaiah and E. H. Rigoni (2009). Key Issues for IT Executives 2008. MIS Quarterly Executive 8(3), 151–159.

Marsden, J., B. Dolan and L. Holt (2003). Nurse practitioner practice and deployment: electronic mail Delphi study. Journal of Advanced Nursing 43(6), 595–605.

Martino, J. P. (1972). Technological Forecasting for Decision Making. New York: American Elsevier Publishing Co.

Niederman, F., J. C. Brancheau and J. C. Wetherbe (1991). Information Systems Management Issues for the 1990’s. MIS Quarterly 15(4), 475–500.

Okoli, C. and S. D. Pawlowski (2004). The Delphi method as a research tool: an example, design considerations and applications. Information & Management 42(1), 15–29.

Parker, D. B. (1998). Fighting Computer Crime: A New Framework for Protecting Information. New York: John Wiley & Sons.

Preble, J. (1984). The Selection of Delphi Panels for Strategic Planning Purposes. Strategic Management Journal 5(2), 157–170.

Procter, S. and M. Hunt (1994). Using the Delphi survey technique to develop a professional definition of nursing for analysing nursing workload. Journal of Advanced Nursing 19(5), 1003–1014.

Schmidt, R. C. (1997). Managing Delphi surveys using nonparametric statistical techniques. Decision Sciences 28(3), 763–774.

Westerman, G. and R. Hunter (2007). IT Risk: Turning Business Threats into Competitive Advantage. Boston: Harvard Business School Press.